Instant Fusion Tracker

| Related documentation |
|---|
| Tracker Coordinate System in Unity |

The Instant Tracker instantly scans a planar surface in the camera frame and uses the device sensors to recognize the space. A 3D object can then be rendered on that surface.

After the MAXST SDK recognizes the target and acquires the initial pose, tracking is performed by ARCore/ARKit.

※ To use ARCore/ARKit, you must enter the actual size. (See Start / Stop Tracker)

The biggest difference from the existing Instant Tracker is how tracking is performed. The existing Instant Tracker tracks using frames from the RGB camera, so tracking is lost if the target leaves the camera frame or if there are few feature points. The Instant Fusion Tracker instead tracks through ARCore/ARKit, which learns the environment around the target. As a result, tracking continues even when the target leaves the camera frame or only a few feature points are visible.

- Make Instant Fusion Tracker Scene

- Start / Stop Tracker

- Use Tracking Information

- GitHub Unity Scene Example

- Hardware Requirements for Android

Make Instant Fusion Tracker Scene

[Install MAXST AR SDK for Unity.][Install MAXST AR SDK for Unity]

Create a new scene.

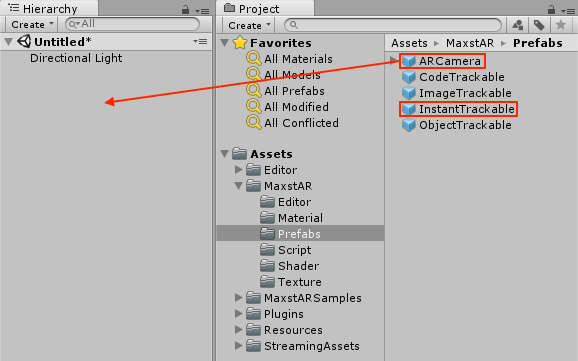

Delete the default Main Camera and add the ARCamera and InstantTrackable prefabs from 'Assets > MaxstAR > Prefabs' to the scene.

※ If you build an application, you must add a License Key to ARCamera.

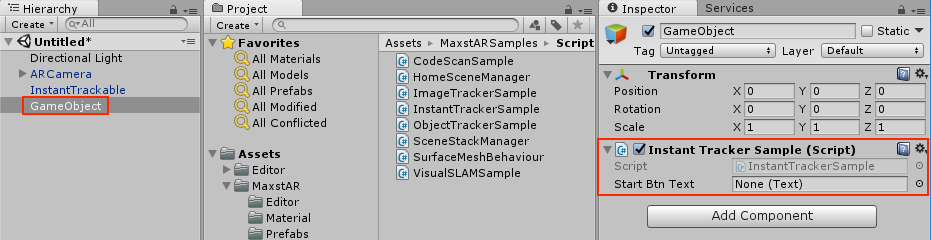

Create an empty object and add 'Assets > MaxstARSamples > Scripts > InstantFusionTrackerSample' as a component.

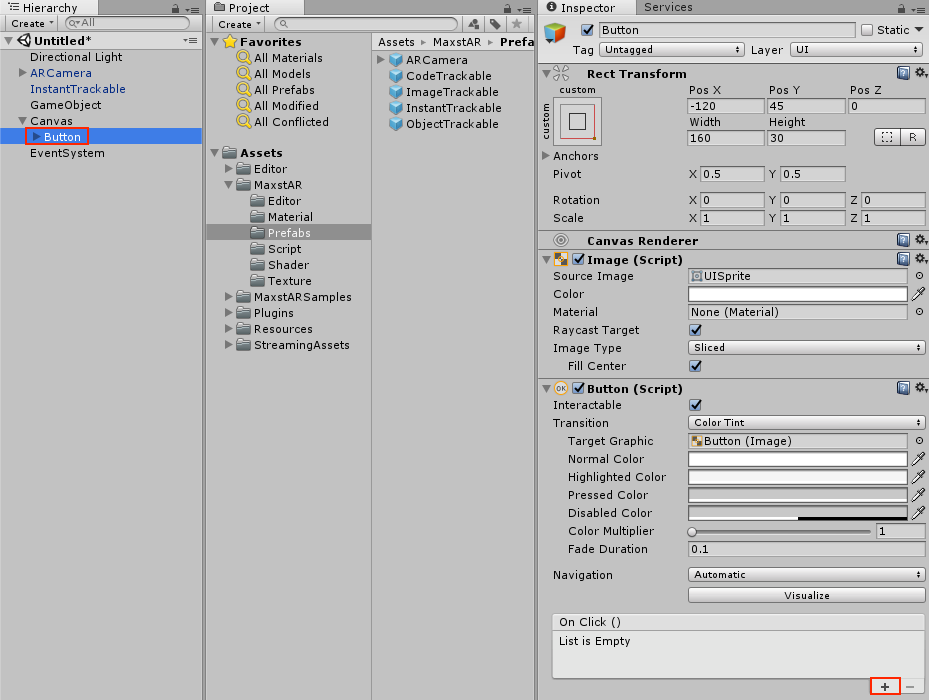

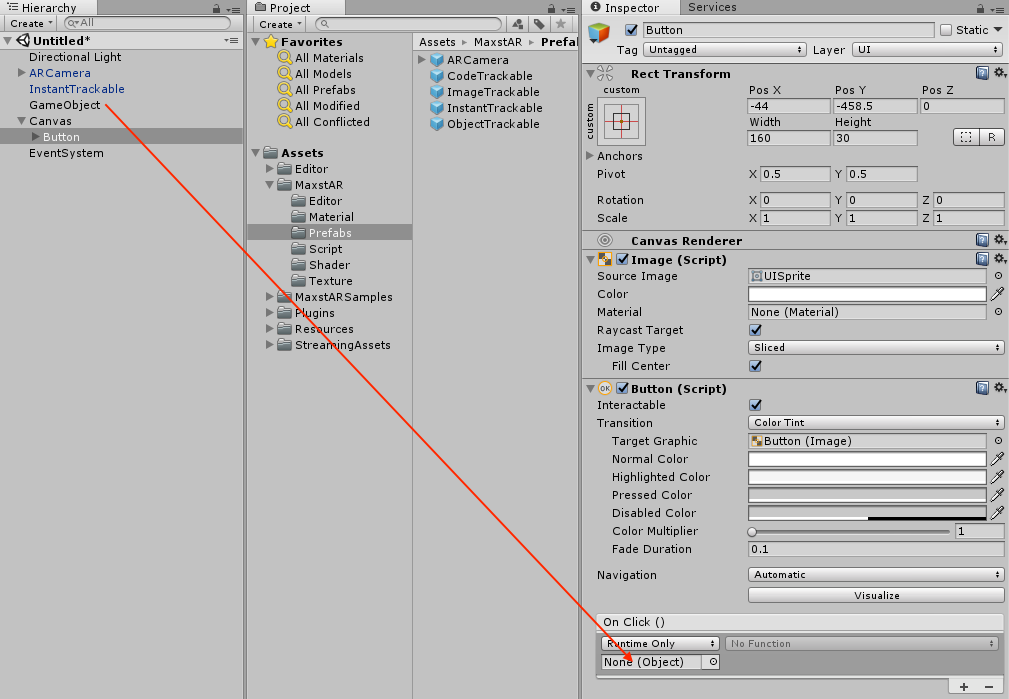

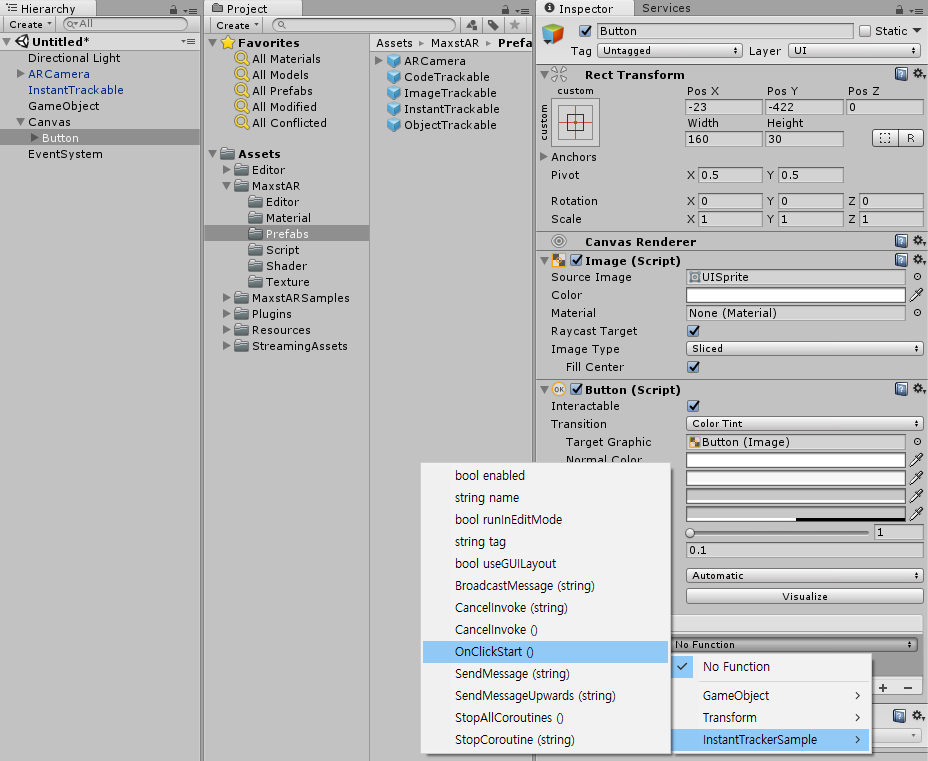

Create a Button, place it in a suitable location, and register the OnClickStart() function of the InstantFusionTrackerSample script in the button's Click Event.

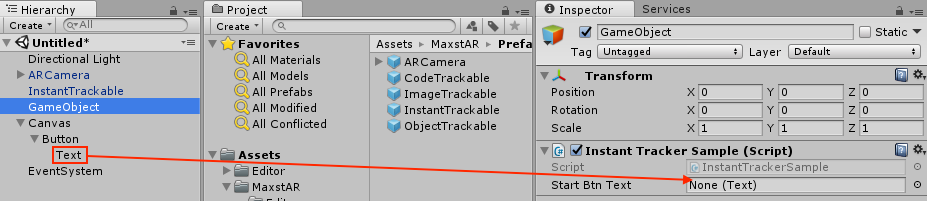

Drag the Button's Text object to the Start Btn Text field of the InstantFusionTrackerSample script.

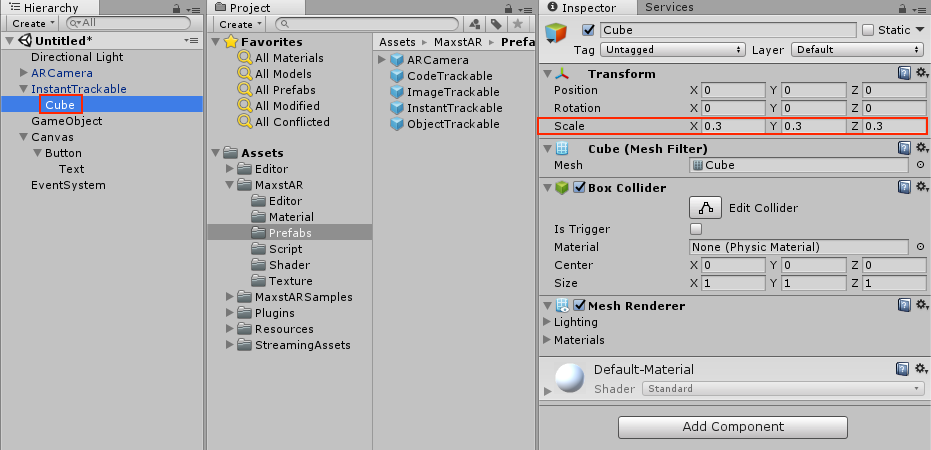

Create a cube as a child of InstantTrackable and set its scale to between 0.1 and 0.3.

Press Play, then click the button. The tracker learns the space instantly and augments the cube.

Start / Stop Tracker

TrackerManager.GetInstance().IsFusionSupported()

This function checks whether the device supports Fusion.

The return value is a bool: true if the device in use is supported, false if it is not.

TrackerManager.GetInstance().GetFusionTrackingState()

This function returns the current Fusion tracking state.

The return value is an int: -1 means that tracking is not working properly, and 1 means that it is working properly.
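As a rough illustration of how the two return values can be read together, the sketch below maps them to a status message. It is written in plain Java (the language of the Android snippet later on this page) so that it runs without Unity. `FusionStatus`, `describe`, and the two constants are hypothetical names, not part of the MAXST SDK; only the values true/false and -1/1 come from the descriptions above.

```java
// Hypothetical helper for illustration; not part of the MAXST SDK.
final class FusionStatus {
    // Values described above for GetFusionTrackingState().
    static final int STATE_NOT_TRACKING = -1;
    static final int STATE_TRACKING = 1;

    // Combines the support flag and the tracking state into one message.
    static String describe(boolean fusionSupported, int fusionState) {
        if (!fusionSupported) {
            // No point in reading the state when the device cannot run Fusion.
            return "Fusion is not supported on this device";
        }
        switch (fusionState) {
            case STATE_TRACKING:
                return "Fusion tracking is working";
            case STATE_NOT_TRACKING:
                return "Fusion tracking is not working properly";
            default:
                // Other values are not described on this page, so report them as-is.
                return "Unknown fusion state: " + fusionState;
        }
    }

    public static void main(String[] args) {
        System.out.println(describe(true, 1));
        System.out.println(describe(true, -1));
        System.out.println(describe(false, 1));
    }
}
```

In a Unity script, the same order of checks would apply: test IsFusionSupported() before starting the tracker, then poll GetFusionTrackingState() while it runs, for example to show a guide message while the state is -1.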

To start / stop Tracker, refer to the following code.

>InstantFusionTrackerSample.cs

    // Start the camera texture, the tracker, and the sensors once at startup.
    void Start()
    {
        ...
        CameraDevice.GetInstance().SetARCoreTexture();
        TrackerManager.GetInstance().StartTracker(TrackerManager.TRACKER_TYPE_INSTANT_FUSION);
        SensorDevice.GetInstance().Start();
        ...
    }

    // Stop the sensors and the tracker when the application is paused.
    void OnApplicationPause(bool pause)
    {
        ...
        SensorDevice.GetInstance().Stop();
        TrackerManager.GetInstance().StopTracker();
        ...
    }

    // Stop everything and release the tracker when the object is destroyed.
    void OnDestroy()
    {
        SensorDevice.GetInstance().Stop();
        TrackerManager.GetInstance().StopTracker();
        TrackerManager.GetInstance().DestroyTracker();
    }

Use Tracking Information

To use the Tracking information, refer to the following code.

>InstantFusionTrackerSample.cs

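    void Update()
    {
        ...
        // Fetch the tracking result for the current frame.
        TrackingState state = TrackerManager.GetInstance().UpdateTrackingState();
        TrackingResult trackingResult = state.GetTrackingResult();

        // Report failure first; OnTrackSuccess below overrides it when a trackable is found.
        instantTrackable.OnTrackFail();

        if (trackingResult.GetCount() == 0)
        {
            return;
        }

        // Feed the first touch position into the accumulated touch offset.
        if (Input.touchCount > 0)
        {
            UpdateTouchDelta(Input.GetTouch(0).position);
        }

        // Apply the accumulated touch offset on top of the tracked pose.
        Trackable trackable = trackingResult.GetTrackable(0);
        Matrix4x4 poseMatrix = trackable.GetPose() * Matrix4x4.Translate(touchSumPosition);
        instantTrackable.OnTrackSuccess(trackable.GetId(), trackable.GetName(), poseMatrix);
    }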
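The pose line in the code above multiplies the tracked pose by a translation on the right, which moves the content by `touchSumPosition` along the trackable's own axes rather than along the axes of the frame the pose is expressed in. The sketch below works through that arithmetic in plain Java with hand-written 4x4 matrices. `PoseOffsetDemo`, `multiply`, `translation`, and `samplePose`, as well as the sample pose and offset values, are hypothetical and chosen only for illustration.

```java
// Hypothetical demo for illustration; not part of the MAXST SDK or Unity.
final class PoseOffsetDemo {
    // Returns a * b for 4x4 matrices indexed as [row][column].
    static double[][] multiply(double[][] a, double[][] b) {
        double[][] out = new double[4][4];
        for (int r = 0; r < 4; r++) {
            for (int c = 0; c < 4; c++) {
                double sum = 0.0;
                for (int k = 0; k < 4; k++) {
                    sum += a[r][k] * b[k][c];
                }
                out[r][c] = sum;
            }
        }
        return out;
    }

    // Builds a translation matrix: identity with (x, y, z) in the last column.
    static double[][] translation(double x, double y, double z) {
        return new double[][] {
            {1, 0, 0, x},
            {0, 1, 0, y},
            {0, 0, 1, z},
            {0, 0, 0, 1}
        };
    }

    // Sample pose: a 90-degree rotation about Z, positioned at (1, 0, 0).
    static double[][] samplePose() {
        return new double[][] {
            {0, -1, 0, 1},
            {1,  0, 0, 0},
            {0,  0, 1, 0},
            {0,  0, 0, 1}
        };
    }

    public static void main(String[] args) {
        // Same order as the Unity code: pose * translation.
        double[][] result = multiply(samplePose(), translation(0.5, 0, 0));
        // The offset along local X ends up along Y because of the rotation.
        System.out.println(result[0][3] + ", " + result[1][3] + ", " + result[2][3]);
        // prints "1.0, 0.5, 0.0"
    }
}
```

With the order reversed (translation times pose), the same offset would be added directly to the pose position, giving (1.5, 0, 0) in this example.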

GitHub Unity Scene Example

GitHub Unity Scene Example: https://github.com/maxstdev/MaxstARSDK_Unity_Sample.git

- ExtraInstantTrackerBrush

- ExtraInstantTrackerGrid

- ExtraInstantTrackerMultiContents

Hardware Requirements for Android

Internally, the MAXST AR SDK's Instant Fusion Tracker uses the Android Rotation Vector, which relies on both the gyro and compass sensors.

If your target device has no gyro or compass sensor, the engine cannot find the initial pose. If there is no sensor value, the engine assumes that the scene in front of the camera is the ground plane.

To check whether the current device supports the Android Rotation Vector, refer to the following code.

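    import android.hardware.Sensor;
    import android.hardware.SensorManager;
    import android.util.Log;
    import java.util.List;

    // Run inside an Activity (or another Context); TAG is your own log tag.
    SensorManager mSensorManager = (SensorManager) getSystemService(SENSOR_SERVICE);
    List<Sensor> sensors = mSensorManager.getSensorList(Sensor.TYPE_ROTATION_VECTOR);

    // Log every rotation vector sensor the device reports.
    Log.i(TAG, "# sensor : " + sensors.size());
    for (int i = 0; i < sensors.size(); i++)
    {
        Log.i(TAG, sensors.get(i).getName());
    }

    if (sensors.isEmpty())
    {
        Log.i(TAG, "There is no Rotation Vector");
    }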

Devices that do not support the Android Rotation Vector

Samsung Galaxy J5, Moto G5 Plus, Moto G4 Plus, iBall Andi 5U, Gionee S Plus